How Can Enterprises Accelerate Legacy Modernization?

Reasons To Consider Legacy Modernization

Many CIOs acknowledge the need for legacy transformation and a move towards an agile, modern system. Outdated software slows a business's response to changing market needs and puts it behind its competitors. A lengthy update or upgrade approach can become a bottleneck and a risk to the company's day-to-day operations. Today, enterprises are looking for an agile, rapid digital transformation approach that makes systems simple to modify and quick to adapt. They also have to address training needs within the organization during such modernization efforts.

A few other factors include:

- Better user experience

- Digitization of offline processes

- Out-of-support software or infrastructure systems

- Limitations in the current technology stack or product

- Enhanced features with better performance

- Data monetization

- Innovative products/services

- Ease of integration with other systems

Choosing the Right Modernization Approach

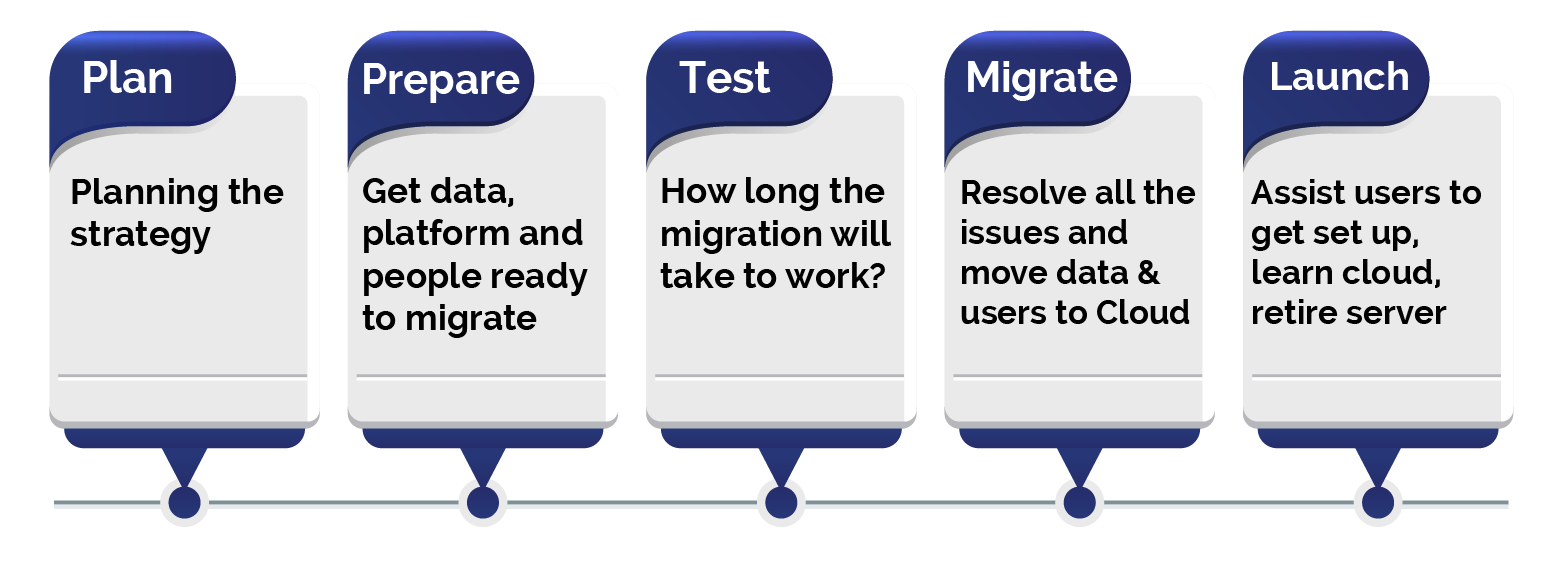

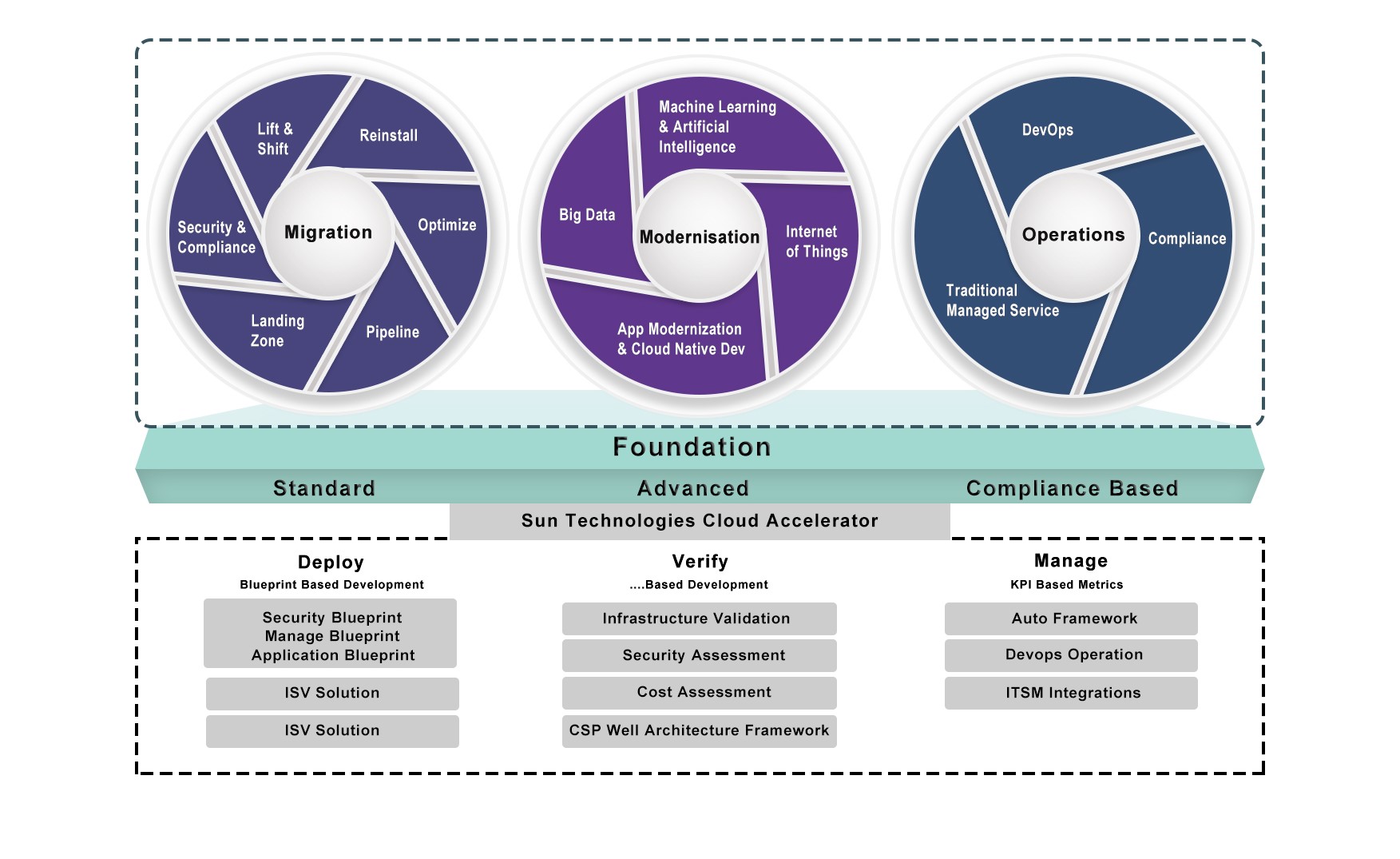

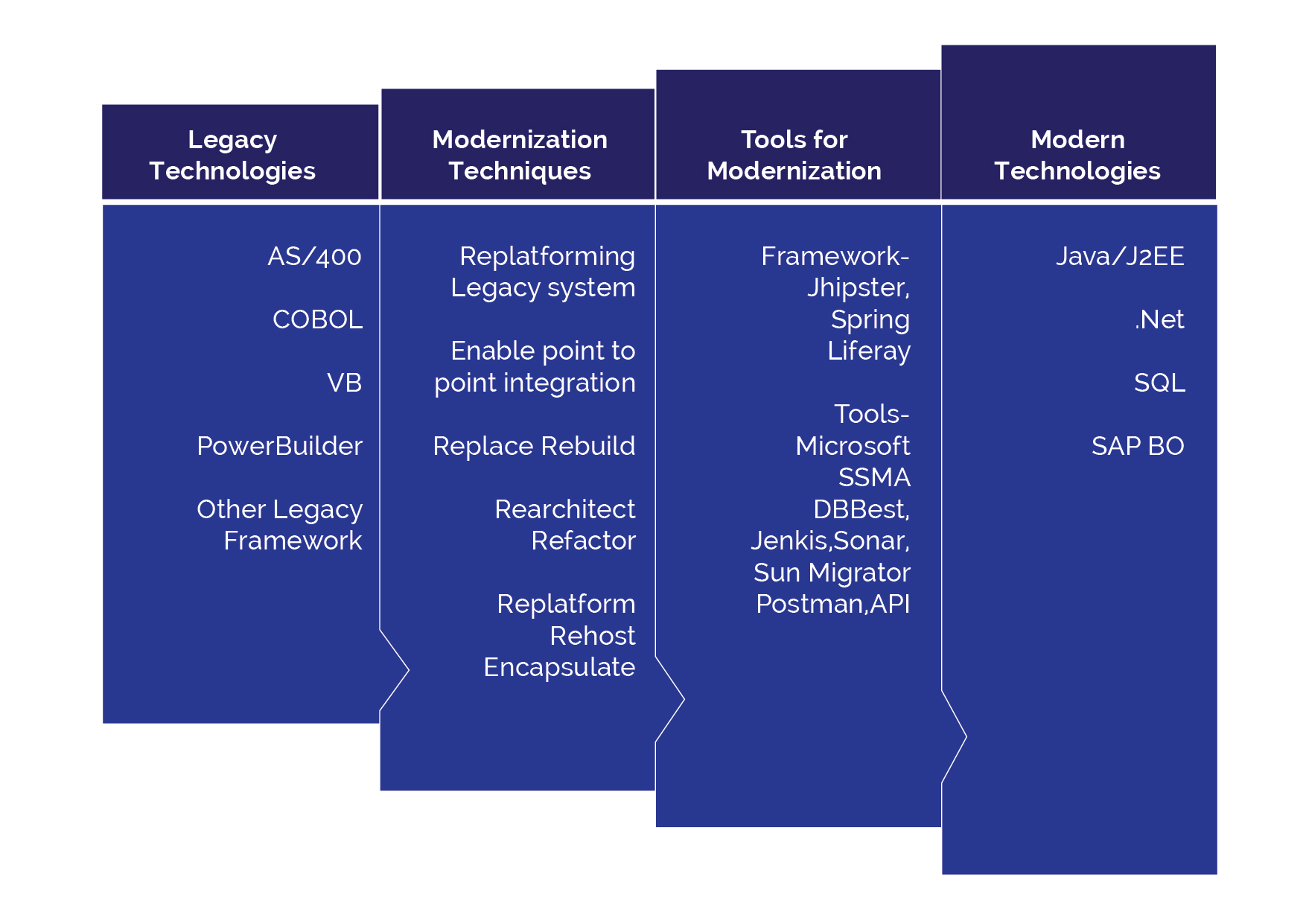

There are many ways to transform legacy systems, and enterprises use their own strategies to achieve legacy modernization. Fully automated migration is an efficient option that uses migration guides, checklists, migration tools, and code generators to convert legacy data and code to modern platforms. It also allows enterprises to tackle legacy modernization without disrupting business operations. Refactoring a legacy application reduces potential risks and improves IT efficiency.

Sun Technologies’ unique agile framework enables enterprises to enhance their legacy systems and drive legacy transformation. Our experts build smart workflows for your business processes, provide strategic insights, and accelerate your legacy transformation. Our agile framework consists of a checklist, a guide, tools, and a framework. The checklist and guide help identify the right approach and chart a clear path to the goal.

Checklist before getting started with legacy modernization

- What is our primary objective in modernizing the legacy systems?

- Are we choosing the right modernization approach?

- Have we prepared an impact analysis chart?

- What are the best practices to follow to accomplish the modernization process?

- What are our next steps for legacy modernization?

- Are there any processes or workflows to be digitized?

- How will the switchover happen?

How does Sun Technologies accelerate legacy modernization?

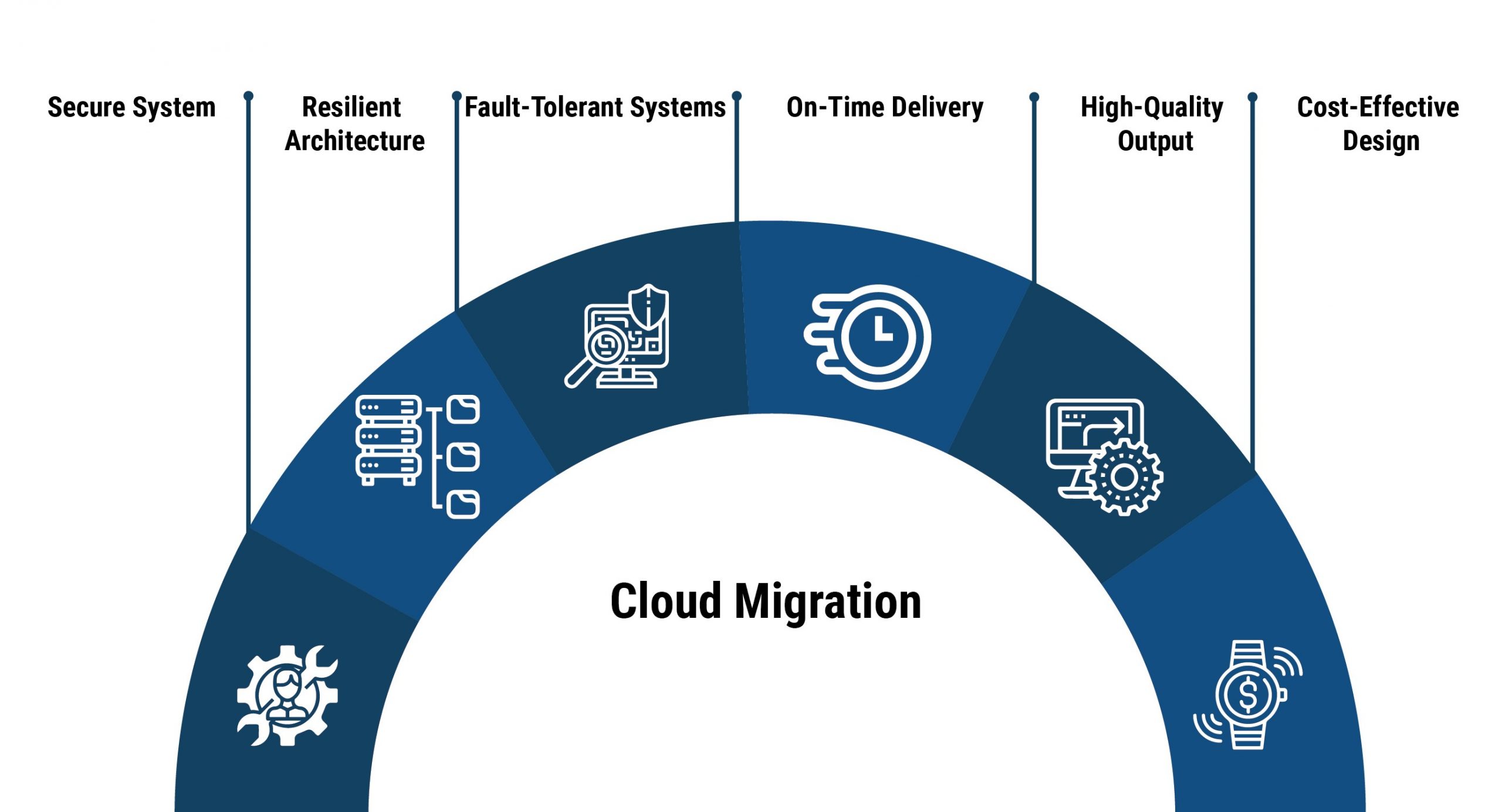

There are several approaches to accelerating modernization; at Sun Technologies, we follow an iterative process that reduces risk while providing the agility to meet the business's changing requirements. We start by evaluating and assessing the current state of the legacy systems. Our team of solution architects then performs an impact analysis of the interdependent systems and prioritizes the application stack, selecting applications that are important to the business yet carry low impact and risk to modernize. Our code generator significantly reduces development time, helping businesses see quick wins from their legacy transformation efforts. We also use a properly layered tech stack so that multiple teams can work together.
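The article does not describe how the impact analysis and prioritization are actually performed. As a minimal, hypothetical sketch, one could model applications as a dependency graph, count how many systems depend on each application (directly or transitively), and rank candidates so that low-impact, low-risk, high-importance applications come first. The `App` class, its 1 to 5 `importance` and `risk` scores, and the scoring rule in `prioritize` are all illustrative assumptions, not Sun Technologies' method.

```python
from dataclasses import dataclass, field


@dataclass
class App:
    name: str
    importance: int  # hypothetical 1-5 business-importance score
    risk: int  # hypothetical 1-5 technical-risk score of modernizing this app
    depends_on: list = field(default_factory=list)  # names of apps this app calls


def dependents(apps):
    """Map each app to every app that depends on it, directly or transitively."""
    direct = {name: set() for name in apps}
    for app in apps.values():
        for dep in app.depends_on:
            direct.setdefault(dep, set()).add(app.name)
    result = {}
    for name in apps:
        seen = set()
        stack = list(direct[name])
        while stack:  # depth-first walk over the reverse dependency graph
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(direct.get(current, ()))
        result[name] = seen
    return result


def prioritize(apps):
    """Order apps so low-impact, low-risk, high-importance candidates come first."""
    impact = {name: len(deps) for name, deps in dependents(apps).items()}
    return sorted(
        apps,
        key=lambda n: (impact[n] + apps[n].risk, -apps[n].importance, n),
    )


# Hypothetical application portfolio for illustration only.
apps = {
    "billing": App("billing", importance=5, risk=2),
    "reports": App("reports", importance=3, risk=1, depends_on=["billing"]),
    "portal": App("portal", importance=4, risk=3, depends_on=["billing", "reports"]),
}
print(prioritize(apps))  # ['reports', 'portal', 'billing']
```

Here `billing` is modernized last because two other systems depend on it, even though it is the most important application; a real assessment would weigh these factors according to the business's own criteria.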

After modernization, we use DevOps tools to verify that no data or functionality has been lost. Our experts ensure that all dependent upstream and downstream systems work seamlessly through detailed data distribution and validation across multiple integration points. Whether the upgrade involves the platform, the database, or the tech stack, Sun Technologies’ solution experts can simplify it and deliver the desired output. We also develop a roadmap that reduces complexity while maximizing the benefits of digital transformation, agile development, UI/UX, and quality assurance.
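The specific DevOps tools used for post-migration validation are not named in the article. One common way to check for data loss is to reconcile records between the legacy and migrated stores by primary key and by a content hash. The following is a minimal sketch under that assumption; the `reconcile` and `row_digest` helpers and the sample records are hypothetical. Hashing is type-sensitive (for example, `10` and `10.0` produce different digests), which a real pipeline would need to normalize first.

```python
import hashlib
import json


def row_digest(row):
    """Hash a record canonically: keys sorted, values JSON-encoded (str() fallback)."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(legacy_rows, migrated_rows, key):
    """Compare legacy and migrated records by primary key and content digest."""
    source = {row[key]: row_digest(row) for row in legacy_rows}
    target = {row[key]: row_digest(row) for row in migrated_rows}
    return {
        "missing": sorted(source.keys() - target.keys()),  # lost in migration
        "unexpected": sorted(target.keys() - source.keys()),  # not in the legacy data
        "changed": sorted(k for k in source.keys() & target.keys() if source[k] != target[k]),
    }


# Hypothetical sample data for illustration only.
legacy = [
    {"id": 1, "name": "Ada", "balance": 100},
    {"id": 2, "name": "Bo", "balance": 50},
    {"id": 3, "name": "Cy", "balance": 0},
]
migrated = [
    {"id": 1, "name": "Ada", "balance": 100},
    {"id": 2, "name": "Bo", "balance": 55},
    {"id": 4, "name": "Di", "balance": 10},
]
print(reconcile(legacy, migrated, "id"))
# {'missing': [3], 'unexpected': [4], 'changed': [2]}
```

An empty result in all three lists would indicate that the migrated dataset matches the legacy one record for record.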

Resulting System Benefits Include:

- Competitive advantage

- Better user experience

- Future-ready business

- Ability to make quick changes

- Faster time to market

- Secure systems

- Integration with third-party systems

Conclusion

Software modernization is dynamic, requires highly skilled resources, and carries risk, whatever method or technique is chosen. Yet the results are worth the risk and give your business a competitive edge.

“Gartner predicts more than half the global economy turns digital by 2023.”

Our legacy modernization solutions streamline business processes from planning to implementation and deliver more value by improving operational efficiency, business agility, and ROI. Sun Technologies helps enterprises accelerate legacy modernization through fast, secure, state-of-the-art technologies and code generators. Get in touch with us today!

Tahir Imran

Tahir has more than 18 years of experience in software design and development, and his expertise lies in designing and developing high-quality products and solutions spanning multiple domains. He is versatile and always eager to tackle new problems, constantly researching and deploying emerging techniques, technologies, and applications.
