All-In-One Scriptless Test Automation Solution!

Using Banking API Integrations to Build Automated Data Streaming and Workflows

Using APIs for legacy system integration is a proven way to modernize apps and add new functionality. Doing so requires integrating with messaging middleware, much of which is nearing limited or end-of-life support. Banks using these systems may struggle to get timely updates, patches, or support when issues arise. With legacy messaging middleware, the bank’s IT teams may also find themselves locked into a specific vendor’s ecosystem, making it challenging to switch to more modern and flexible solutions.

Find Answers to Some Key Questions

How can you modernize legacy messaging infrastructure using automated data streaming?

How do banks leverage automated data streaming?

Why enable asynchronous data in banking?

Why leverage heterogeneous datasets for banking workflows?

Why enable automated underwriting in banking workflows?

What skills are required to enable automated data streaming?

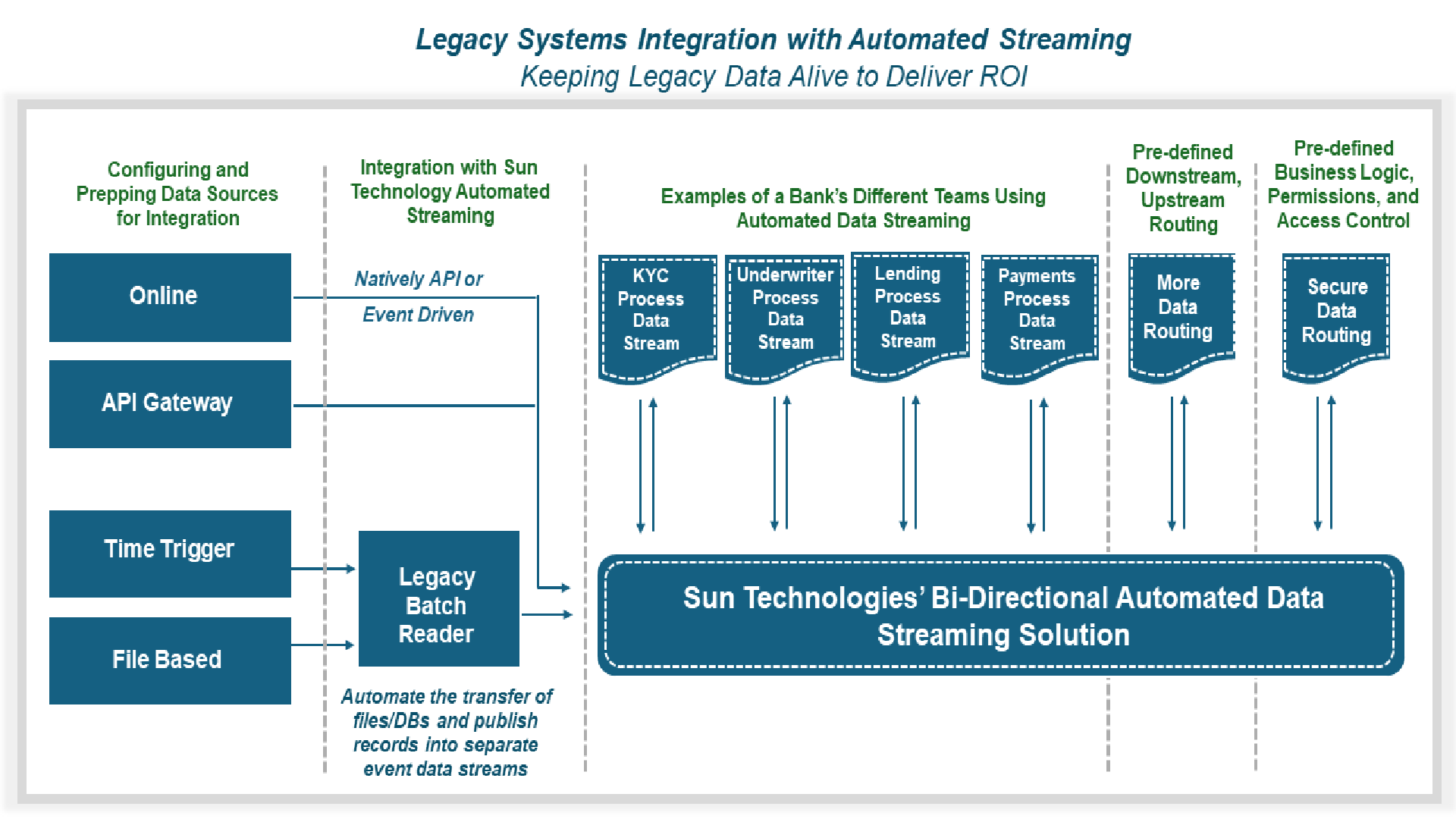

Discover how no-code API integrations are modernizing legacy messaging infrastructure by integrating it with decoupled, automated data streams.

For example, for one of our banking clients, we enabled event-driven data streaming for processes involving Treasury Management, Lending & Underwriting, Guarantee Management, Information Security, and Regulatory Compliance.

Our Legacy Integration specialists are not only helping the bank identify the right data pipelines, but also launching new functionalities using No-Code API plugins.

Scenario: Online Mortgage Lending Platform

Lending and underwriting processes in the financial industry often require asynchronous processing, heterogeneous datasets, and high-volume throughput to efficiently assess borrower risk, process applications, and make lending decisions.

Asynchronous Processing:

Heterogeneous Datasets:

High Volume Throughput:

Automated Underwriting:

Agile Decision Making:

In this scenario, the online mortgage lending platform demonstrates how asynchronous processing, integration of heterogeneous datasets, and high-volume throughput are essential for efficient lending and underwriting processes.

These technologies and capabilities enable the platform to handle a large number of loan applications, assess borrower risk accurately, automate underwriting decisions, and provide timely notifications to borrowers. This results in improved efficiency, faster loan processing times, reduced manual intervention, and enhanced customer experience in the lending process.
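To make the scenario more concrete, here is a minimal Python sketch of asynchronous underwriting over heterogeneous inputs. Everything in it is an assumption for illustration: the `Application` fields, the three `fetch_*` functions (stand-ins for a credit bureau, an income verification service, and a property valuation service), and the decision thresholds are hypothetical and do not describe any real lending policy or the platform discussed above.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class Application:
    applicant_id: str
    loan_amount: float


# Hypothetical data sources. Each one stands in for a separate system
# and simulates network latency with a short sleep.
async def fetch_credit_score(app: Application) -> int:
    await asyncio.sleep(0.01)
    return 720


async def fetch_annual_income(app: Application) -> float:
    await asyncio.sleep(0.01)
    return 90_000.0


async def fetch_property_value(app: Application) -> float:
    await asyncio.sleep(0.01)
    return 400_000.0


async def underwrite(app: Application) -> str:
    # Collect the heterogeneous inputs concurrently rather than one by one.
    score, income, value = await asyncio.gather(
        fetch_credit_score(app),
        fetch_annual_income(app),
        fetch_property_value(app),
    )
    loan_to_value = app.loan_amount / value
    loan_to_income = app.loan_amount / income
    # Illustrative thresholds only, not real underwriting rules.
    if score >= 680 and loan_to_value <= 0.8 and loan_to_income <= 4.5:
        return "approve"
    return "refer"


async def process_batch(apps: list[Application]) -> dict[str, str]:
    # Many applications are underwritten concurrently to raise throughput.
    decisions = await asyncio.gather(*(underwrite(a) for a in apps))
    return {a.applicant_id: d for a, d in zip(apps, decisions)}


if __name__ == "__main__":
    batch = [Application("A-1", 300_000.0), Application("A-2", 390_000.0)]
    print(asyncio.run(process_batch(batch)))
```

Because the three lookups for each application run concurrently, the time per application is roughly that of the slowest source rather than the sum of all three, which is the practical benefit of asynchronous processing in a high-volume lending workflow.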

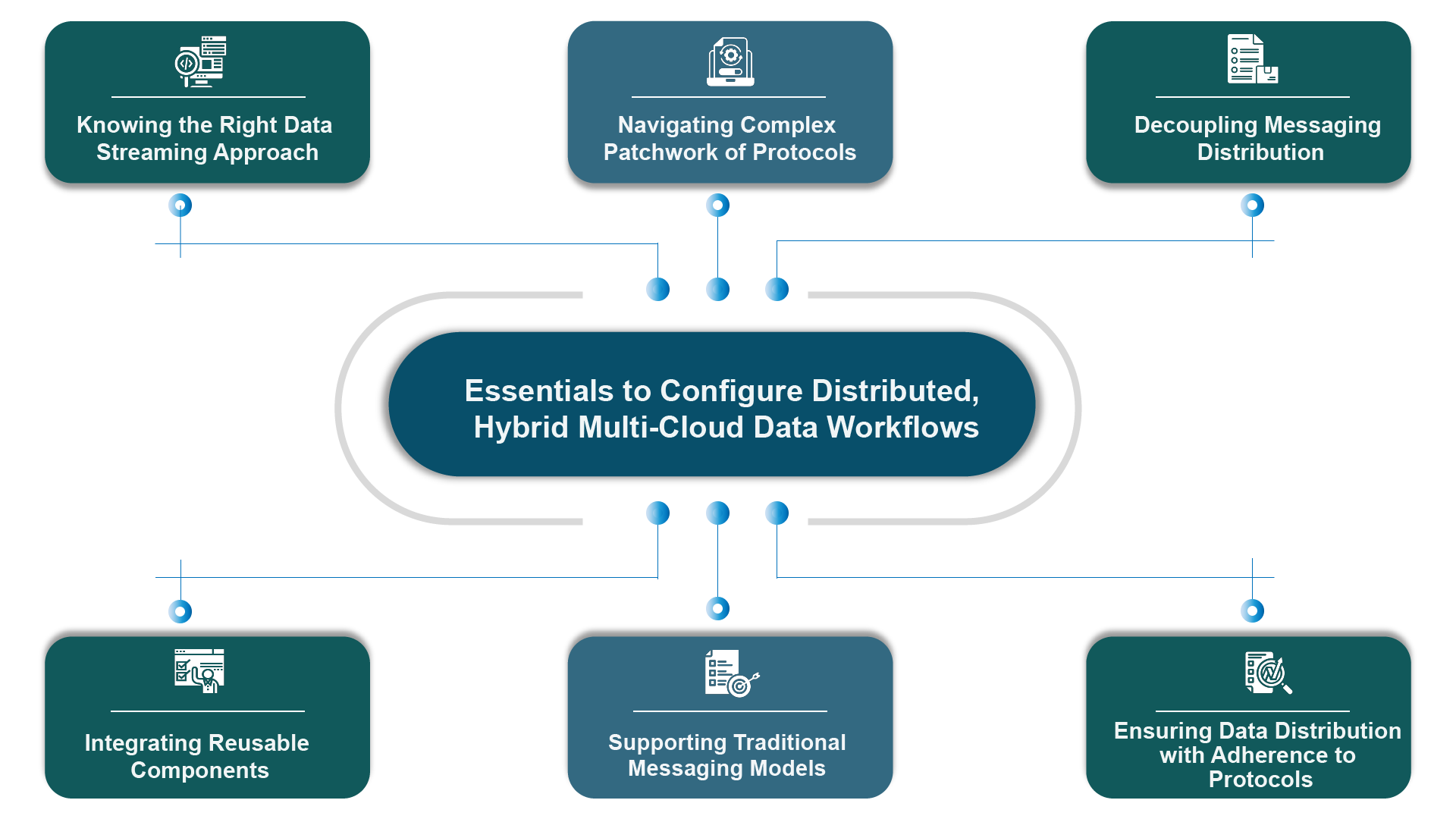

To configure modern streaming that connects with data residing in legacy messaging infrastructure, you need the ability to configure streaming platforms such as Kafka and Infrastructure-as-Code tools such as Terraform. Terraform helps automate the provisioning of resources such as virtual machines, load balancers, and firewalls, while allowing code to be reused through a repository host such as GitHub.

When fixing legacy messaging models, you need specialized knowledge of configuring streaming applications that run in different environments, meaning a hybrid cloud or multi-cloud adoption scenario. The modern data streaming architecture needs to connect with legacy hardware, software, tools, and technologies that are on-premises as well as with data that is in the cloud. Knowledge of hybrid configurations therefore becomes paramount in highly regulated industries such as banking, where on-premises infrastructure is often retained to meet compliance requirements such as GDPR.

Knowing the Right Data Streaming Approach

You need the ability to configure open-source solutions that let applications built on JMS, AMQP, and even Kafka use Apache Pulsar as the broker infrastructure, without tampering with the application code or development model.

Navigating Complex Patchwork of Protocols

This requires the ability to communicate across JMS, Kafka, and AMQP applications in a single solution powered by open-source technologies.
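One way to picture a single solution that spans several protocols is a common message envelope with one small adapter per protocol. The Python sketch below is an illustration under stated assumptions: the `Envelope` type and the input dictionary shapes are hypothetical stand-ins for what a real Kafka, JMS, or AMQP client library would hand you, and no real client API is being called.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Envelope:
    source: str       # protocol the message arrived on
    destination: str  # topic, queue, or routing key
    message_id: str
    payload: bytes


# Each adapter maps a protocol-specific message (modelled here as a plain
# dict with hypothetical keys) onto the shared Envelope.
def from_kafka(record: dict[str, Any]) -> Envelope:
    message_id = f'{record["partition"]}-{record["offset"]}'
    return Envelope("kafka", record["topic"], message_id, record["value"])


def from_jms(message: dict[str, Any]) -> Envelope:
    return Envelope("jms", message["JMSDestination"],
                    message["JMSMessageID"], message["body"])


def from_amqp(delivery: dict[str, Any]) -> Envelope:
    return Envelope("amqp", delivery["routing_key"],
                    delivery["message_id"], delivery["body"])


ADAPTERS: dict[str, Callable[[dict[str, Any]], Envelope]] = {
    "kafka": from_kafka,
    "jms": from_jms,
    "amqp": from_amqp,
}


def normalize(protocol: str, raw: dict[str, Any]) -> Envelope:
    """Convert a protocol-specific message into the common envelope."""
    try:
        adapter = ADAPTERS[protocol]
    except KeyError:
        raise ValueError(f"unsupported protocol: {protocol}") from None
    return adapter(raw)


if __name__ == "__main__":
    print(normalize("kafka", {"topic": "loans", "partition": 0,
                              "offset": 42, "value": b"{}"}))
```

Downstream consumers then work only with `Envelope`, so adding another protocol means adding one adapter rather than touching every consumer.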

Decoupling Messaging Distribution

This means decoupling the distribution of messages and streams from any single data distribution architecture or from the interface used to access the data.
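As a rough illustration of decoupling, the sketch below uses a tiny in-memory publish/subscribe broker written in Python. The `TopicBroker` class and the `loan.submitted` topic name are hypothetical; a production system would use a real broker, but the shape of the idea is the same: publishers know only the topic, never the consumers.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class TopicBroker:
    """Minimal in-memory broker: publishers and subscribers share only a topic name."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        # Deliver the event to every handler registered for the topic and
        # report how many handlers received it.
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    broker = TopicBroker()
    risk_queue: list[dict] = []
    audit_log: list[dict] = []

    # Two independent consumers subscribe without the publisher knowing.
    broker.subscribe("loan.submitted", risk_queue.append)
    broker.subscribe("loan.submitted", audit_log.append)

    delivered = broker.publish("loan.submitted", {"applicant_id": "A-1"})
    print(delivered, risk_queue, audit_log)
```

New consumers can be attached to a topic, or removed from it, without changing the code that publishes events.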

Integrating Reusable Components

The programmer must be adept at placing code inside a Terraform module and reusing that module in multiple places throughout the application.
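Module reuse is normally written directly in Terraform's own configuration language. To stay in one language for the examples in this article, the Python sketch below instead generates the equivalent configuration in Terraform's JSON syntax (a file ending in `.tf.json`), where each entry under `module` calls the same module with different inputs. The module path `./modules/streaming_cluster` and the `broker_count` variable are hypothetical names chosen for illustration.

```python
import json

# Hypothetical local module path; the module itself is not shown here.
MODULE_SOURCE = "./modules/streaming_cluster"


def build_config(environments: dict[str, dict]) -> dict:
    """Build a Terraform JSON document that reuses one module per environment."""
    modules = {}
    for env_name, variables in environments.items():
        # Every module call points at the same source; only the inputs differ.
        modules[f"streaming_{env_name}"] = {"source": MODULE_SOURCE, **variables}
    return {"module": modules}


if __name__ == "__main__":
    config = build_config({
        "dev": {"broker_count": 1},
        "prod": {"broker_count": 3},
    })
    # Written to a file such as main.tf.json, this could be read by Terraform.
    print(json.dumps(config, indent=2))
```

The point of the sketch is the pattern rather than the generator: the module is defined once and called several times with different variables, so a fix to the module reaches every environment that uses it.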

Supporting Traditional Messaging Models

Deliver features and functions through traditional messaging models such as JMS and AMQP, running them on top of a common underlying infrastructure.

Ensuring Data Distribution with Adherence to Protocols

While upgrading to a modern streaming architecture, ensure seamless interaction with the established protocols and patterns that are critical for operations.

With the growing number of tools and technologies adopted by different teams within the same organization, communication with the legacy middleware poses a massive challenge. While these systems have served their purpose in the past, they come with several limitations, especially when compared to modern messaging solutions.

Here are some common concerns associated with legacy messaging middleware:

Lack of Features:

Legacy messaging middleware may lack the advanced features and functionalities found in modern messaging solutions. This includes features such as real-time analytics, intelligent routing, and support for different message formats and protocols.

High Maintenance Costs:

Maintaining and upgrading legacy messaging middleware can be expensive. Organizations may need to invest in specialized skills and resources to keep these systems running, especially if the original developers or administrators are no longer available.

Integration Challenges:

Integrating legacy messaging systems with newer applications or cloud services can be difficult. These systems may not support modern APIs or standards, requiring custom development workarounds that are time-consuming and costly.

Performance Limitations:

Legacy systems may struggle to handle the speed and volume of messages required for real-time processing. This can lead to delays in message delivery, impacting critical business processes.

Scalability Issues:

Legacy messaging middleware may not be designed to scale horizontally or vertically, limiting its ability to handle increasing message loads or new applications.

Compliance and Security Concerns:

Older messaging systems may not meet current security standards and compliance requirements, exposing organizations to potential data breaches and regulatory penalties.

Difficulty in Adopting New Technologies:

Legacy systems can hinder the adoption of new technologies such as cloud computing, microservices architecture, and containerization. These modern technologies often require messaging systems that are more agile and compatible.

Inflexibility:

Legacy messaging middleware may lack the flexibility to adapt to changing business needs and evolving technologies. This can hinder innovation and slow down the pace of digital transformation initiatives.

Lack of Support for Modern Protocols:

Many legacy messaging middleware solutions do not support modern messaging protocols like MQTT or AMQP, which are essential for IoT (Internet of Things) and real-time data processing applications.

Complexity:

Legacy messaging middleware systems tend to be complex, with intricate configurations and setups. This complexity makes it challenging for developers and administrators to understand, maintain, and troubleshoot these systems.

Limited Scalability:

Legacy systems may not scale well to meet modern requirements for handling large volumes of messages and data. As organizations grow and their messaging needs increase, legacy middleware can become a bottleneck, leading to performance issues.

Outdated Technology:

Legacy messaging middleware often uses outdated technology and protocols that are not as efficient or secure as modern alternatives. This can pose security risks and make it difficult to integrate with newer systems and technologies.

Legacy Code Dependencies:

Over time, applications may become tightly coupled with legacy messaging middleware, making it difficult and risky to replace or upgrade the messaging system without impacting existing functionality.

In summary, while legacy messaging middleware has served organizations well in the past, it poses several challenges in today’s rapidly evolving technology landscape. Organizations looking to stay competitive and meet modern integration and communication needs often find it beneficial to migrate to more modern and flexible messaging solutions. These newer systems offer improved scalability, performance, security, and integration capabilities that are essential for today’s digital businesses.

Use this eBook to learn how the world’s top legacy migration specialists are leveraging no-code technologies to enable legacy systems integration and automate data streams.

Qualify for a free consultation on the right application modernization strategy for your enterprise.