All-In-One Scriptless Test Automation Solution!


Why Do You Need DevOps Engineers and Cloud Architects to Configure Automated Streaming Workflows in a Multi-Cloud Environment?

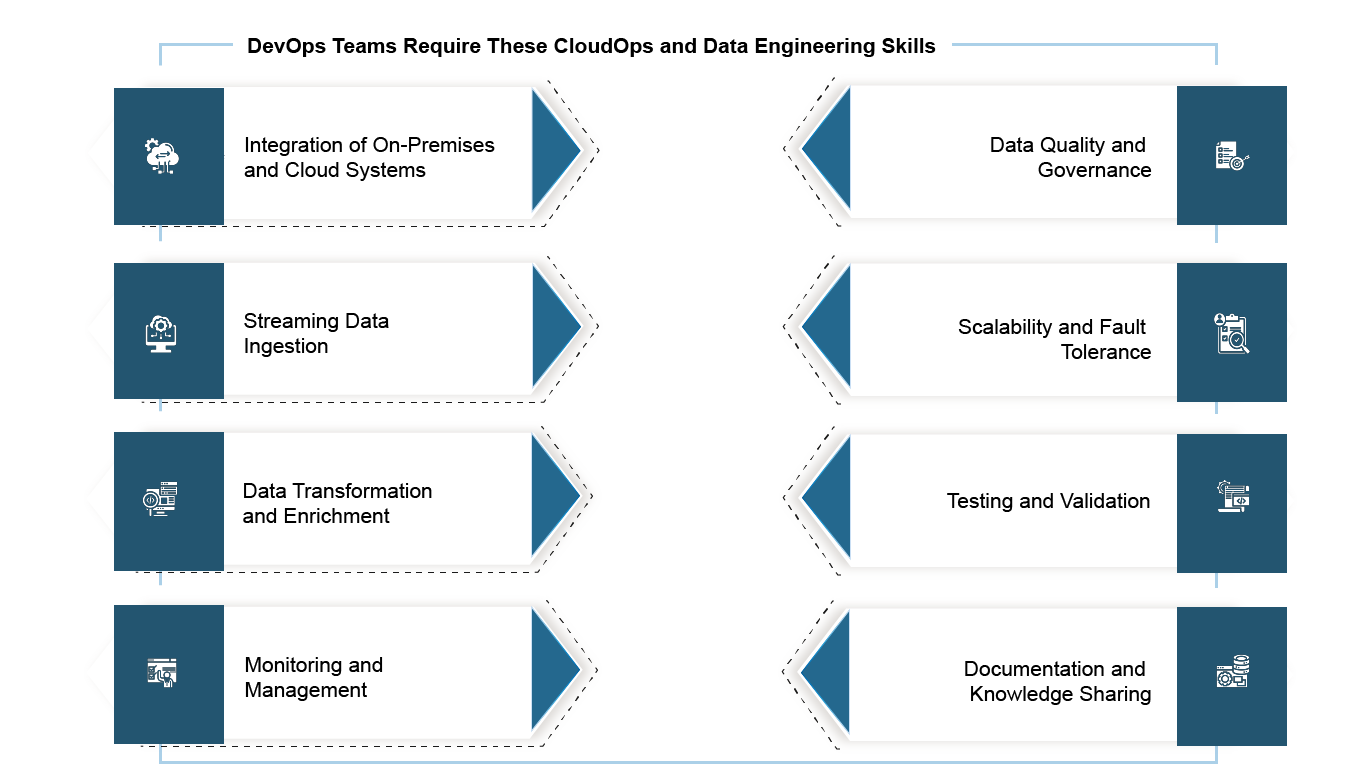

DevOps Engineers and Cloud Architects play an active role in planning and managing coding tasks, ensuring that the developed code aligns with deployment requirements and business objectives. To do this well in a hybrid cloud environment, where data resides both on-premises and in the cloud, a skilled DevOps Cloud Architect and Data Engineer must have expertise in configuring streaming workflows that span both.

When deploying applications, the team needs to be adept with tools like Terraform and Ansible to define and manage infrastructure for each new release, or to duplicate IT infrastructure at different branch locations. Before any software or feature release, the team's expertise with CI tools like Jenkins and GitLab CI helps automate testing of code changes and catch issues early. The role also requires hands-on coding experience to perform conversions or refactoring when re-platforming code from legacy systems such as COBOL to Java and Angular.
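To illustrate how one infrastructure definition can be reused across branch locations, here is a minimal Python sketch that writes one Terraform variable file (`*.tfvars.json`) per branch. The variable names, regions, and CIDR ranges are hypothetical placeholders, and the sketch assumes a Terraform module that declares matching input variables.

```python
import json
from pathlib import Path

# Hypothetical baseline shared by every branch location. These variable names
# are illustrative; they must match the variables your Terraform module declares.
BASE_VARS = {
    "instance_type": "t3.medium",
    "kafka_broker_count": 3,
    "enable_monitoring": True,
}

# Hypothetical branch-specific overrides, keyed by branch name.
BRANCH_OVERRIDES = {
    "new-york": {"region": "us-east-1", "vpc_cidr": "10.10.0.0/16"},
    "london": {"region": "eu-west-2", "vpc_cidr": "10.20.0.0/16"},
}


def build_branch_vars(base: dict, overrides: dict) -> dict:
    """Merge the shared baseline with one branch's values; overrides win on conflict."""
    merged = dict(base)
    merged.update(overrides)
    return merged


def write_tfvars(out_dir: Path) -> list:
    """Write one <branch>.tfvars.json file per branch and return the paths written."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for branch, overrides in BRANCH_OVERRIDES.items():
        path = out_dir / f"{branch}.tfvars.json"
        path.write_text(json.dumps(build_branch_vars(BASE_VARS, overrides), indent=2))
        written.append(path)
    return written


if __name__ == "__main__":
    for p in write_tfvars(Path("tfvars")):
        # Each file is then passed to the same Terraform module for that branch.
        print(f"terraform plan -var-file={p}")
```

Keeping the module shared and varying only the variable files means adding a branch location is mostly a matter of adding one entry to the overrides.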

Discover how No-Code API integrations are modernizing Legacy Messaging Infrastructure by connecting it to decoupled Automated Data Streams.

For example, for one of our banking clients, we enabled event-driven data streaming for processes in each of the following areas: Treasury Management, Lending & Underwriting, Guarantee Management, Information Security, and Regulatory Compliance.
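Event-driven streaming decouples the systems that produce events from the ones that react to them. The following in-memory Python sketch shows that publish/subscribe pattern; it stands in for a real message broker, and the topic names and payload fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Event:
    topic: str      # hypothetical topic name, e.g. "treasury.payments"
    payload: dict


@dataclass
class EventBus:
    """In-memory stand-in for a message broker: producers publish, subscribers react."""
    _handlers: dict = field(default_factory=lambda: defaultdict(list))

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        """Register a handler to be called for every event on `topic`."""
        self._handlers[topic].append(handler)

    def publish(self, event: Event) -> int:
        """Deliver the event to every handler on its topic; return the delivery count."""
        handlers = self._handlers.get(event.topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    audit_log = []
    # Hypothetical downstream consumers for two banking domains.
    bus.subscribe("treasury.payments", lambda e: audit_log.append(("treasury", e.payload["amount"])))
    bus.subscribe("lending.applications", lambda e: audit_log.append(("underwriting", e.payload["applicant_id"])))
    bus.publish(Event("treasury.payments", {"amount": 250_000}))
    bus.publish(Event("lending.applications", {"applicant_id": "A-102"}))
    print(audit_log)
```

In a production deployment, the in-memory bus would be replaced by a message broker that persists events, but the producer/consumer decoupling works the same way.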

Our Legacy Integration specialists are not only helping the bank identify the right data pipelines but also launching new functionality using No-Code API plugins.

Data Ingestion:
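A minimal Python sketch of ingestion from mixed sources, assuming an on-premises system that exports CSV and a cloud feed that emits JSON Lines. Each record is tagged with its source and an ingestion timestamp, then grouped into micro-batches; the formats, source labels, and batch size are illustrative assumptions.

```python
import csv
import io
import json
from datetime import datetime, timezone
from itertools import chain
from typing import Iterable, Iterator


def from_csv(text: str, source: str) -> Iterator[dict]:
    """Parse CSV rows (e.g. an on-premises export) into source-tagged records."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": source, "data": row}


def from_jsonl(text: str, source: str) -> Iterator[dict]:
    """Parse JSON Lines (e.g. a cloud event feed) into source-tagged records."""
    for line in text.splitlines():
        if line.strip():
            yield {"source": source, "data": json.loads(line)}


def micro_batches(records: Iterable[dict], size: int) -> Iterator[list]:
    """Group records into batches of `size`, stamping each with a UTC ingestion time."""
    batch = []
    for rec in records:
        rec["ingested_at"] = datetime.now(timezone.utc).isoformat()
        batch.append(rec)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # flush the final, possibly partial, batch
        yield batch


if __name__ == "__main__":
    onprem_export = "id,amount\n1,10\n2,20\n"
    cloud_feed = '{"id": 3, "amount": 30}\n'
    records = chain(from_csv(onprem_export, "onprem"), from_jsonl(cloud_feed, "cloud"))
    for batch in micro_batches(records, size=2):
        print(len(batch), [r["source"] for r in batch])
```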

Data Transformation:
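Transformation often starts with legacy record formats. This Python sketch parses a fixed-width record, similar in spirit to a COBOL copybook layout, and normalizes it; the field layout, names, and validation rules are hypothetical.

```python
from decimal import Decimal
from typing import Optional

# Hypothetical fixed-width layout: (field name, start, end) character offsets.
LAYOUT = [("account_id", 0, 10), ("currency", 10, 13), ("amount_cents", 13, 25)]


def parse_fixed_width(line: str) -> dict:
    """Slice a fixed-width legacy record into named, whitespace-stripped fields."""
    return {name: line[start:end].strip() for name, start, end in LAYOUT}


def transform(record: dict) -> Optional[dict]:
    """Normalize a parsed record; return None if it fails basic checks.

    Amounts must be unsigned digit strings in this sketch; signed values are rejected.
    """
    if not record["account_id"] or not record["amount_cents"].isdigit():
        return None
    return {
        "account_id": record["account_id"],
        "currency": record["currency"].upper(),
        # Decimal avoids binary floating-point rounding on monetary values.
        "amount": Decimal(record["amount_cents"]) / 100,
    }


if __name__ == "__main__":
    print(transform(parse_fixed_width("ACC0000001usd000000012345")))
```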

Data Storage:
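A common storage convention for streamed data is to partition files by source and date so downstream queries can skip irrelevant data. The Python sketch below writes JSON Lines files into a Hive-style `key=value` directory layout; the field names `source` and `event_time` and the `part-0000.jsonl` file name are assumptions for illustration.

```python
import json
from collections import defaultdict
from datetime import datetime
from pathlib import Path


def partition_key(record: dict) -> str:
    """Build a partition path such as 'source=cloud/dt=2024-05-01'.

    Assumes `event_time` is an ISO-8601 string that includes a UTC offset.
    """
    ts = datetime.fromisoformat(record["event_time"])
    return f"source={record['source']}/dt={ts.date().isoformat()}"


def write_partitioned(records: list, root: Path) -> dict:
    """Append records as JSON Lines under their partition directory; return counts."""
    groups = defaultdict(list)
    for rec in records:
        groups[partition_key(rec)].append(rec)
    counts = {}
    for key, recs in groups.items():
        part_dir = root / key
        part_dir.mkdir(parents=True, exist_ok=True)
        with open(part_dir / "part-0000.jsonl", "a", encoding="utf-8") as fh:
            for rec in recs:
                fh.write(json.dumps(rec) + "\n")
        counts[key] = len(recs)
    return counts


if __name__ == "__main__":
    sample = [
        {"source": "cloud", "event_time": "2024-05-01T09:30:00+00:00", "amount": 10},
        {"source": "onprem", "event_time": "2024-05-02T11:00:00+00:00", "amount": 20},
    ]
    print(write_partitioned(sample, Path("lake")))
```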

Analytics and Visualization:
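Real-time analytics typically aggregates events over time windows. This Python sketch sums values into tumbling (fixed, non-overlapping) windows and renders a minimal text bar chart in place of a dashboard; it assumes timestamps are ISO-8601 strings carrying a UTC offset.

```python
from collections import defaultdict
from datetime import datetime, timezone


def tumbling_window_sums(events, window_seconds: int = 60) -> dict:
    """Sum values into tumbling windows keyed by each window's UTC start time.

    `events` is an iterable of (ISO-8601 timestamp with UTC offset, value) pairs.
    """
    sums = defaultdict(float)
    for ts, value in events:
        epoch = datetime.fromisoformat(ts).timestamp()
        # Floor the timestamp to the start of its window.
        start = int(epoch // window_seconds) * window_seconds
        key = datetime.fromtimestamp(start, tz=timezone.utc).isoformat()
        sums[key] += value
    return dict(sums)


def text_chart(sums: dict, scale: float = 1.0) -> str:
    """Render one line per window, in time order, with a bar of '#' characters."""
    return "\n".join(
        f"{window}  {'#' * int(total * scale)}" for window, total in sorted(sums.items())
    )


if __name__ == "__main__":
    events = [
        ("2024-05-01T09:30:05+00:00", 10.0),
        ("2024-05-01T09:30:55+00:00", 5.0),
        ("2024-05-01T09:31:10+00:00", 2.5),
    ]
    print(text_chart(tumbling_window_sums(events)))
```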

Monitoring and Management:
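A standard health signal for a streaming workflow is consumer lag: how far a consumer group trails the newest data written to each partition. The Python sketch below computes lag from offsets in the style of Apache Kafka and raises alerts above a threshold; in practice the offsets would come from your broker's admin tooling, and the data class and threshold here are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PartitionOffsets:
    partition: int
    log_end_offset: int    # offset of the next message producers will write
    committed_offset: int  # offset of the next message the consumer group will read


def consumer_lag(offsets: list) -> dict:
    """Lag per partition: how many messages the consumer still has to process."""
    return {o.partition: max(o.log_end_offset - o.committed_offset, 0) for o in offsets}


def lag_alerts(offsets: list, threshold: int) -> list:
    """Return readable alerts for partitions whose lag exceeds `threshold`."""
    return [
        f"partition {p}: lag {lag} exceeds {threshold}"
        for p, lag in sorted(consumer_lag(offsets).items())
        if lag > threshold
    ]


if __name__ == "__main__":
    snapshot = [PartitionOffsets(0, 1000, 990), PartitionOffsets(1, 5000, 3500)]
    for alert in lag_alerts(snapshot, threshold=1000):
        print(alert)
```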

Security and Compliance:
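Two common controls for streamed financial data are masking sensitive fields and signing messages so consumers can detect tampering. This Python sketch uses the standard `hmac` and `hashlib` modules for HMAC-SHA256; the key constant is a placeholder that, in practice, would come from a secrets manager, and the masking rule is an illustrative assumption.

```python
import hashlib
import hmac
import json

SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"  # hypothetical placeholder


def mask_account(number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if visible <= 0:
        return "*" * len(number)
    return "*" * max(len(number) - visible, 0) + number[-visible:]


def sign(payload: dict, key: bytes = SECRET_KEY) -> str:
    """HMAC-SHA256 over a canonical JSON encoding (sorted keys, no extra spaces)."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def verify(payload: dict, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign(payload, key), signature)


if __name__ == "__main__":
    message = {"account": mask_account("1234567890"), "amount": 100}
    signature = sign(message)
    print(message, verify(message, signature))
```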

Testing and Validation:
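Automated pipeline tests often begin with schema checks on sample records. The Python sketch below validates required fields and types against a hypothetical schema and reports every problem found, so it can back a CI test that fails on any non-empty result.

```python
from typing import Any, Dict, List

# Hypothetical schema: field name -> expected Python type.
SCHEMA: Dict[str, type] = {"account_id": str, "currency": str, "amount": float}


def validate(record: Dict[str, Any], schema: Dict[str, type] = SCHEMA) -> List[str]:
    """Return a list of problems; an empty list means the record conforms."""
    errors = []
    for name, expected in schema.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            errors.append(
                f"{name}: expected {expected.__name__}, got {type(record[name]).__name__}"
            )
    # Flag fields the schema does not know about, in a stable order.
    errors.extend(f"unexpected field: {name}" for name in sorted(set(record) - set(schema)))
    return errors


if __name__ == "__main__":
    sample = {"account_id": "A1", "amount": "12.5", "note": "x"}
    for problem in validate(sample):
        print(problem)
```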

Documentation and Knowledge Sharing:

Real-Time Insights: Enables real-time data processing and analytics for timely decision-making.

Scalability: Scales to handle large volumes of data from diverse sources.

Cost Efficiency: Optimizes resource usage and minimizes operational costs.

Flexibility: Allows for seamless integration and data flow between on-premises and cloud environments.

Reliability: Ensures fault tolerance and high availability of streaming workflows.

By leveraging the expertise of data engineers, organizations can successfully configure and manage complex streaming workflows in a hybrid cloud environment. This enables them to harness the power of real-time data for actionable insights, improved operations, and better business outcomes.

Use this eBook to learn how the world’s top legacy migration specialists are leveraging no-code technologies to integrate legacy systems and automate data streams.

Qualify for a free consultation on the right application modernization strategy for your enterprise.